Security Admin Console:

Information Architecture

Creating a More Usable Navigation for Security Admins in a Cybersecurity WebApp

🔎 Research Categories: Exploratory, Evaluative

📝 Project Type: Information Architecture

🕵️♀️ Role/Contribution: Research Lead, Information Architect, UX Writer, UX Strategist

🗓️ Timeline: 3 months

🛠️ Relevant Tools: Optimal Workshop, FigJam, Figma, Amplitude

🤝 Cross-Functional Team: UX Researcher, UX Manager, UX Designer, Product Manager

👥 Stakeholder Teams: UX, Product, Engineering, Support, Sales, Product Marketing, Marketing

🔒 Users: 3K+ monthly active users (targeting security admins)

*Note: The visuals on this page came from my final UX Research report and presentation and are meant to showcase my research reporting ability.

🎯 Business Outcomes:

Reduce the number of customer success/service calls and navigation-related support tickets by organizing the admin console more intuitively, so customers can find what they need without assistance

Increase feature adoption rates by creating a more logical information architecture that highlights the full capabilities of the platform, so customers are aware of and can easily access valuable features they're already paying for but may not be using

🥅 High-Level Research Objectives:

Understand who currently uses the product and how many of those users are admins

Gather information from various stakeholders to determine the product's future objectives

Learn how security admins want and expect a cybersecurity product’s admin console to be organized

Background

Problem Statement

As our admin console continues to grow over time, we need to better understand the mental model of security admins in order to create a navigation that is more usable for them.

Hypothesis

We can make our product more usable for security admins by focusing on and updating the information architecture. We believe that usage will increase if the product is more usable and valuable for our users.

Research Goals

Understand the specific functionalities that are missing for people in the product currently.

Uncover the types of people who want to use the product UI vs. API and why.

Discover which admin tools security admins like and dislike using and why.

Key Insights

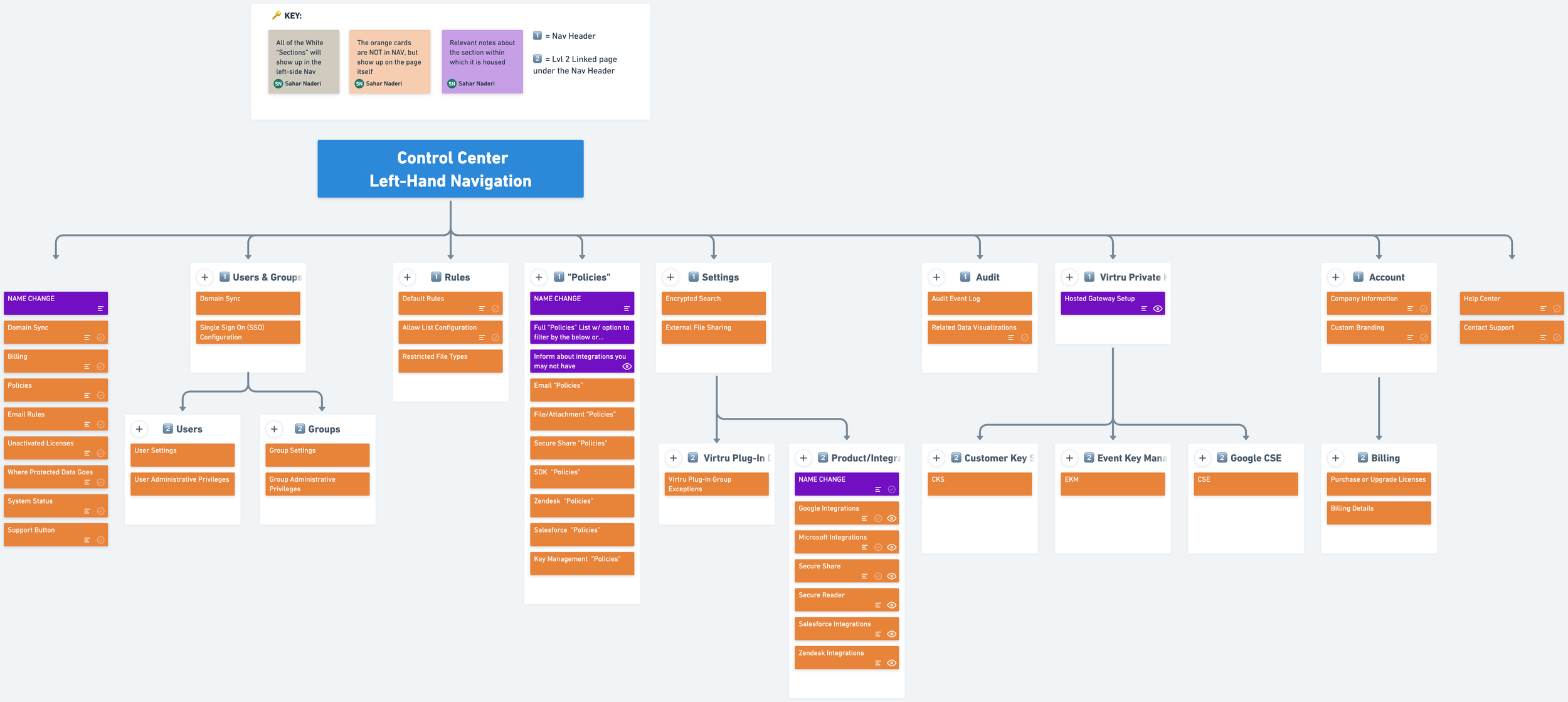

Dissecting the current sitemap and comparing it against our initial round of card sorting (dendrogram)

A dashboard will provide security admins with an overview and quick links to the most used sections of the product (uncovered through research), making it easier for them to navigate and manage user licenses.

The current iteration of the product does not have a dashboard, and the need for one will increase as the product continues to grow and more features are added.

A majority of customers interviewed currently use the product for the following: monitoring capabilities, usage data, user activations, etc.

A majority of customers interviewed were excited to see the following new desired features being added to the product: billing, data visualizations, dashboard, etc.

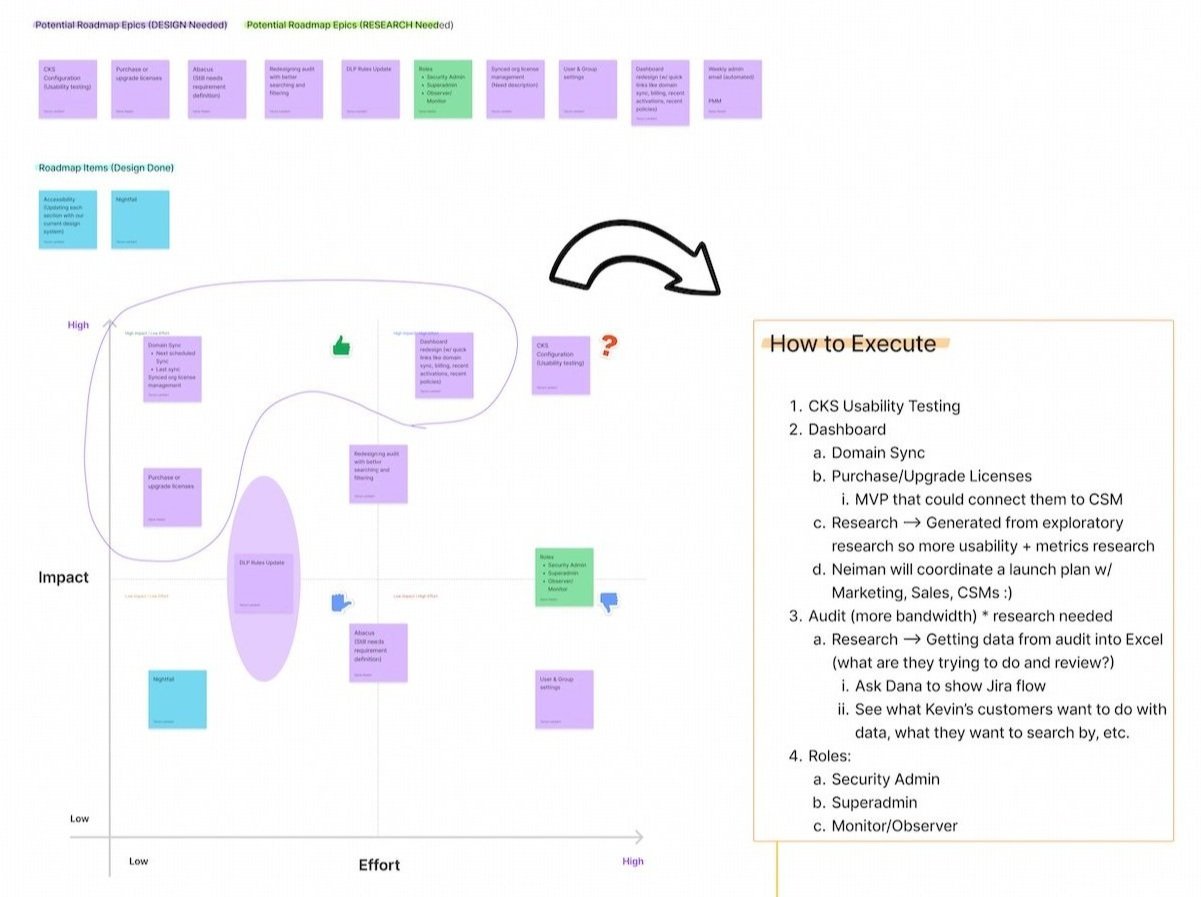

Next steps: After the product manager and I created a prioritization matrix, Dashboard became the first piece of this project to move forward into the design phase. Together with the rest of the core project team, I began examining dashboard design options and brainstorming ways to visualize the data that would be most helpful for security admins. We also started sketching and paper prototyping as a team.

External use of the word “policies” (to describe protected data) confused customers because other admin tools use “policies” and “rules” interchangeably.

100% of customers failed the tree test task related to finding the section of the product where they could view the last data encryption event.

One of our customer support managers mentioned that Google Workspace, one of the products ours integrates with, refers to its “rules” as “policies”. This could add to the confusion for our customers.

“Policies” is an internally-used term that can and should still be used internally. However, we need to rename this in customer-facing materials and products to avoid confusion.

Next steps: In collaboration with the marketing and product marketing teams, we kickstarted a larger conversation about how to refer to “policies” externally. We held an initial brainstorming session to get ideas down (in FigJam) and followed up with conversations, comments, and dot voting. Our first step is to rename the “Policies” section to “My Data” and monitor usability from there.

Many customers utilize both the Users & Groups section and the "Policies" section of the product to conduct self-audits since they do not have an Audit section yet.

The participant paths in the tree test showed that users expected to view the last data encryption event in the Audit Event Log (a future feature for the product).

During customer interviews, ~50% of participants discussed using the Users & Groups or “Policies” sections of the product to generate their own reports (through different searches and exports).

Often, these users already thought “Policies” was their Audit section.

Next steps: When the product manager and I created the prioritization matrix, Audit was listed as our second priority. We plan to do further research on Audit, including how our customers currently self-audit, to create a better Audit experience for them. Our SVP of Product & Engineering also mentioned the potential of automating the data exports our users currently run themselves, making that data easier for security admins to manage and refer back to.

For further information on findings and insights, please contact me.

Tree Test: Task #6 Pie Chart - 100% failure due to misunderstanding what “Policies” are

Tree Test: Task #6 Paths - 100% failure due to misunderstanding what “Policies” are

Affinity Map of conversations that took place during (or after) card sorts and tree tests

Research Impact

User Impact

Dashboard: Allows security admins to see a clear and quick picture of their data, making it easier to keep track of various information and lessening their mental load.

Renaming “Policies”: Aligns our product with our users’ mental models.

Understanding Audit: By better understanding how our users are self-auditing on the platform currently, we can design a valuable Audit experience to help them get the information they care about quickly and easily. The Dashboard can also play a large part in this.

Strategic Impact

After reviewing the research insights with the core team, we strategically prioritized them using a prioritization matrix to determine the best course of action for product updates. Recognizing the substantial nature of information architecture (IA), we understand that comprehensive updates can’t happen overnight. Most of the insights and design work are scheduled for implementation in Q1 and Q2 of the following year. However, by assessing our IA, we have established a clear roadmap for the next 2-3 years to support the product's growth. This approach keeps our teams aligned and is part of a larger shift to get UX work well ahead of Engineering, so that UX never becomes the bottleneck.

My immediate next step was to partner with our UX Designer and our Engineering team to determine how to use Datadog to track the usage of newly rolled-out buttons, pages, and features in the admin console. We weighed the data we would ideally track against the effort required for our Engineering team to implement that tracking through Datadog. We finalized a list of three items to begin tracking upon the initial implementation of new designs.

Business Impact

While the admin tool itself doesn't directly generate revenue (it is an “overview” type tool that comes with any product purchase), the insights uncovered during card sorts and tree tests can have a significant impact on our business through upsell potential. During these sessions, customers gained insight into upcoming sections, features, integrations, and usability enhancements, which generated excitement and anticipation. Often, this was the first time they had heard about a new integration or feature. This has already created opportunities for potential upsells, which I facilitated between customers and their customer success managers. As we deploy new information and sections into the tool in the future, it will open up even more possibilities for customer self-discovery and upsells.

Integrations: Notably, our recent and upcoming integrations with popular products like Zendesk have generated significant customer interest, particularly for those who already use the integrated product. The seamless integration with tools they are already using is highly attractive.

Billing: During my interactions with customers, ~50% of them expressed enthusiasm about the newly added Billing section in the tool. This new section of the product allows easy access to product suite pricing, license usage monitoring, and hassle-free addition of licenses as needed. This streamlined process presents opportunities for quick upsells and increased product usage as we enhance self-service capabilities.

Custom branding: Originally conceived for Enterprise clients, the custom branding feature garnered unexpected attention during the research phase, with many customers discovering its availability for the first time. As we shift towards a more self-serve model, strategically placing custom branding within the navigation schema improves its discoverability, leading to potential upsell opportunities as well.

Prioritization Matrix to help us decide where to put our focus as we move forward

Meeting with team to discuss Audit (current plans, future capabilities, etc.)

Methodologies

Problem-scoping exercise with the core team

Sketching activity with UX Manager after second card sort

Updated site map after conducting card sorts and tree tests

Some of the key methodologies for this project are listed below, with explanations as to why they were chosen for this project:

Problem-scoping: Before I dive into any research project, I complete a problem-scoping exercise with relevant stakeholders. This is an opportunity to brain-dump together, really fine-tune our problem statement, and better understand our constraints, assumptions/biases, and capabilities as I plan out the research. This also helps to paint a clear picture of the project landscape so that I can determine which methodologies are appropriate for the project at hand.

Moderated Card Sorts (Internal, Open + External, Hybrid): To gather valuable insights and address customer pain points in the information architecture project, I employed a combination of stakeholder interviews and card sorting techniques.

Initially, I conducted a round of card sorting with internal stakeholders, including customer success managers and the customer support team. This allowed me to leverage their in-depth knowledge of the tool and their direct interactions with customers to identify pain points and understand the tool's usage patterns. These stakeholder interviews also helped in identifying any confusing or poorly labeled items within the tool, which could be addressed before the customer card sorting phase.

Subsequently, I conducted a customer card sort, using the insights gathered from the initial round of card sorting with stakeholders. In this hybrid approach, customers could use the suggested categories or create and name groups themselves, which brought in a more customer-centric perspective and ensured their preferences were considered in the organization of categories.

By combining stakeholder interviews and customer card sorting, I was able to incorporate both internal expertise and user perspectives, resulting in an informed and user-centered information architecture design.
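Card sort analyses such as similarity matrices and dendrograms typically start from how often participants place two cards in the same group. Below is a minimal sketch of that pairwise agreement calculation. The card names, group names, and the `co_occurrence` helper are hypothetical illustrations, not data or tooling from this study.

```python
from collections import Counter
from itertools import combinations


def co_occurrence(sorts):
    """Return {(card_a, card_b): share of participants who grouped the pair together}."""
    counts = Counter()
    for groups in sorts:
        for cards in groups.values():
            # Sort the cards so each pair is always stored in the same order.
            for a, b in combinations(sorted(cards), 2):
                counts[(a, b)] += 1
    return {pair: n / len(sorts) for pair, n in counts.items()}


# Hypothetical sorts: one dict per participant, mapping group name -> cards.
sorts = [
    {"People": ["Users", "Groups"], "Data": ["Policies", "Event Log"]},
    {"People": ["Users", "Groups"], "Audit": ["Event Log"], "Data": ["Policies"]},
]

similarity = co_occurrence(sorts)
for pair, share in sorted(similarity.items()):
    print(pair, share)
```

Pairs with a high share are candidates for living in the same section of the navigation, while pairs that split participants are worth probing in follow-up conversations.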

Tree Tests: To validate and enhance the organization and labeling of the information architecture, I conducted a tree test following the card sorting activities, using our updated sitemap. This allowed me to evaluate the findability and navigational structure of the identified categories, ensuring an optimized user experience. By simulating realistic user scenarios and measuring the effectiveness of the information architecture, any usability issues were identified and addressed, resulting in an improved overall user experience.
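A tree test task is commonly scored by checking whether each participant's final selection is one of the correct destinations. The sketch below shows that scoring under simple assumptions. The paths, node names, and the `task_success_rate` helper are hypothetical and do not reproduce the study's data or Optimal Workshop's own calculations.

```python
def task_success_rate(paths, correct_nodes):
    """Return the fraction of participants whose final selected node is a correct answer."""
    if not paths:
        return 0.0
    successes = sum(1 for path in paths if path and path[-1] in correct_nodes)
    return successes / len(paths)


# Hypothetical participant paths: each list is the sequence of nodes one person clicked.
paths = [
    ["Home", "Audit", "Audit Event Log"],
    ["Home", "Users & Groups"],
    ["Home", "Audit", "Audit Event Log"],
]

# Assume the task's only correct destination is the "Policies" section.
rate = task_success_rate(paths, correct_nodes={"Policies"})
print(f"Task success rate: {rate:.0%}")
```

Looking at where the failed paths end, and not only at the rate itself, is what points to a labeling problem as opposed to a structural one.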

Structural Argument: To strengthen our problem-scoping exercise and evaluate our proposed information architecture (IA) options, I facilitated a "Structural Argument" activity inspired by Abby Covert's article Structural Arguments for Information Architecture, which discusses the importance of creating effective structural arguments when evaluating and comparing IA options. Using a template I created in FigJam, we worked as a team to reflect on our navigation choices, enhance our storytelling, and present our IA proposal with confidence. The activity also let us share our learnings from across the project, identify additional constraints, and highlight areas where we needed further information, such as the metadata available for dashboard design. (The template is publicly available on my Figma Community page!)

Sketching: Though I’m a UX Researcher, I have a visual design/illustration background. Drawing upon that, sketching sessions played a crucial role in our information architecture project. Through collaborative sketching sessions, we gained a deeper understanding of research insights and seamlessly transitioned from the research phase to design. Sketching facilitated visual exploration, ideation, and effective communication of complex concepts, while promoting active participation and generating innovative design solutions.

Information Architecture (IA) Structural Argument activity (based on Abby Covert’s article)

Storyboard showing the billing use case (attached to our Jira ideas portal)

Storyboard showing the license use case (attached to our Jira ideas portal)

Reflections & Learnings

Recruiting customers (rather than representative users) can be difficult without a streamlined process in place. Exhaust all possible avenues!

Connect with customer success and customer support teams! Be borderline annoying 😂

Talk to your product manager! What customers have they connected with that use the product?

Talk to your UX teammates — how have they recruited customers before? Do they know any customers that might be open to meeting for an incentivized research activity?

Learn how to navigate Amplitude (yes, I figured out how to use Amplitude for this project haha)!

Documentation is vital to project success! In a challenging turn of events, our Product Manager (PM) was laid off just as the project reached its conclusion. Fortunately, due to our prior completion of the Structural Argument activity, we had comprehensive documentation spanning the entire project lifecycle. This not only safeguarded our project's integrity but also facilitated a smooth transition for our new PM, ensuring minimal disruption to our momentum.

The different workflows between product research and generative research are so interesting. Product research involves close collaboration and frequent check-ins with the core team, often leading to more meetings, as it aims to gather insights and feedback for specific product development. In contrast, generative research can feel more independent, with periods of individual work interspersed with stakeholder connections and collaborative efforts.

“I would think Rules are maybe what triggers what is permissible to send out and what is not? Versus Policies would be more about maybe the structure of things?”